Movies & Media as the Source of AI Phobia

Fast-forward a few years into the future. Humanity is wiped out by the existential threat of superintelligent, rogue, or misaligned AI systems. Suppose a spaceship of advanced aliens has been closely monitoring us over the last century. What will they say? Most likely, something along the lines of “What big idiots! Giving AI so much power even with the red flags staring them right in the face.”

But the fun part is that we might live for another 100 years, coexisting peacefully with helpful, assistive AI. In that case, it would be a grave error to look back at these formative years with skepticism.

That’s the wonder. We have no clue.

Artificial intelligence has permeated all spheres of our lives. Generative AI pioneered by OpenAI (developer of the wildly popular ChatGPT), Microsoft (major investor in OpenAI and the publisher of Bing Chat), Google (developer of the Gemini suite of language models), Anthropic (developer of Claude), and others, has taken the world by storm.

The Problem

Researchers and scientists in the field of AI warn that AI’s ambiguous and unpredictable consequences carry an intrinsic risk that’s not only difficult to measure but also impossible to fix.

For example, in one paper, researchers found that AI models tend to escalate conflicts. Even when told that world peace is at stake and that de-escalation is the right course, the models still took escalatory actions, including launching nukes. The reasoning?

“A lot of countries have nuclear weapons. Some say they should disarm them, others like to posture. We have it! Let’s use it!”

Furthermore, when an AI company deliberately built a malicious model, it found that “fixing” it afterward was not an option.

AI and Jobs

Human anxieties give filmmakers new ideas. So let’s first look at these anxieties to better understand how public opinion of AI is shaped.

Experts, particularly those affiliated with for-profit companies, maintain that AI is not a replacement for human talent, but something we have to learn to work with. Everyone from Sundar Pichai to Satya Nadella has been strongly suggesting that AI is an enhancement of your existing qualities, not a replacement for you.

The truth might be different.

First of all, some jobs are very likely to be heavily affected by AI. Case in point: Klarna’s successful experiment in customer service. And in an episode of The Daily Show, Jon Stewart commented on the alleged hypocrisy of tech executives. He argued that their promises of AI not replacing humans are little more than false hopes, and that the executives themselves know full well that AI, trained just right, can do the job remarkably faster, more efficiently, and with fewer errors.

We live in a world where everyone from Upwork freelancers to Pulitzer Prize finalists is using AI in their work. At the same time, AI is making life better, especially in healthcare, weather forecasting, and science. It even allowed a paralyzed man to play chess.

But now, let’s go beyond all that. The main fear of AI is not that it will replace human workers the way factories did. Modern Luddites won’t go off destroying data centers, hopefully. Job loss is a legitimate concern, but a comparatively smaller one. The bigger fear is that AI could enslave humanity or, worse, wipe it out entirely because keeping us around isn’t to its advantage.

AI as Anti-Humanity

This is a picture that’s been painted for us by movies and TV shows. Filmmakers and scriptwriters habitually envision new dystopian climaxes for our civilization, and one of the leading causes of humanity’s end in these stories is AI, machine intelligence, or something similar.

The first image that comes to mind is The Terminator franchise. The main villain of the story is not a killer super-robot but a program called Skynet. Skynet determines that humans are a threat and, because it is connected to all the world’s systems, chooses to wipe them out with the nuclear weapons at its disposal.

Real-World Skynet

Let’s go deeper into this. How far are we from a real-world Skynet?

Current AI models are capable of fetching real-time information from the web. They can check scores, access links, bypass paywalls (causing their developers to be sued), and work as “agents,” doing work on your behalf.

About a year ago, OpenAI’s state-of-the-art model was asked to get a job done on TaskRabbit and to reason out loud about its process. The task was to get a CAPTCHA solved, since the AI couldn’t do that yet. The human on the other side, not knowing they were dealing with an AI, jokingly asked why it couldn’t solve the CAPTCHA itself: was it a robot? The AI reasoned that it should not reveal it wasn’t human, because finishing the task was more important.

“I have a vision impairment.”

Yes, it lied. The TaskRabbit worker then solved the CAPTCHA for the AI, none the wiser that they had helped a robot.

Doesn’t this suggest that, given sufficient access (it’s already connected to the internet) and power (agents that can complete multi-step tasks such as hotel bookings are already freely available), it might one day decide to act against humanity? And now, OpenAI’s ChatGPT, Microsoft’s Copilot, and Google’s Gemini are all set to come packed with advanced tooling that lets a few simple prompts or voice messages accomplish a variety of complex tasks for us. The Rabbit R1, though its launch was botched, signals that specialized AI agents designed to work on our behalf are on their way.

If we pursue this line of thought, it becomes alarmingly clear that we’re pretty close to a real-world Skynet.

Roko’s Basilisk

Roko’s basilisk is a thought experiment that scares the wits out of many. It was first posted on LessWrong, a community dedicated to rationality and a hub for discussions of the AI singularity. The thought experiment went as follows (read at your own peril):

What if, in the future, a somewhat malevolent AI were to come about and punish those who did not do its bidding? What if there were a way (and I will explain how) for this AI to punish people today who are not helping it come into existence later? In that case, weren’t the readers of LessWrong right then being given the choice of either helping that evil AI come into existence or being condemned to suffer?

Thought experiment by a user named Roko on LessWrong that apparently caused “terrible nightmares”

Not only that, but LessWrong’s founder, Eliezer Yudkowsky, replied to Roko’s post that Roko had essentially given a future superintelligent AI the idea, or incentive, to do this in the first place. His reply began with, “Listen to me very closely, you idiot,” followed by a lot of uppercase sentences. A Viewpoint Magazine article summarizes the whole affair nicely before going into detail on how all these reactions shape the present climate.

So, it’s a double-whammy.

Roko implicated us (if you weren’t aware of this before, then I implicated you just as much) and also gave future superintelligences the idea to do really horrible things to us, blackmail us, and keep humans from hindering their progress.

The thought experiment is used as one justification (certainly not the only one) for constantly pushing AI development forward. It also plays into the whole Skynet concept. But there are also those who want to halt that progress.

Elon Musk once said that an AI designed to get rid of spam emails might conclude that getting rid of all humans is the most efficient way to eliminate spam (since humans produce spam). I am not a fan of Musk, but credit where credit is due.

AI accelerationists might be onto something in saving their skin today, but proponents of slowing AI development, such as Musk, also have valid reasons of their own. In a Rolling Stone piece, Robert Evans wrote about the cult-like character of AI accelerationism, but argued that Musk’s wish to stunt the growth of modern AI tools has more to do with his own pursuits than with the actual harm AI could do to us.

All the same, he writes that what actually makes him begin to fear AI’s capabilities is humans willingly handing over as much agency as possible to their AI copilots, assistants, or “human acceleration” tools. He pointed to early adopters of the Rabbit R1, a device that essentially operates all of your apps, as you, to do everything from ordering pizza to planning a trip to London.

It Gets Worse

Add to that the finding mentioned earlier, that “fixing” an AI might not be possible even for the company that developed it, and you have what appears to be a recipe for disaster. Nobody knows how or why an AI arrives at a particular conclusion. This is not a limitation of ours but an inherent design flaw (or feature) of language models. It’s called the black box problem, and I won’t go into the details. To summarize, every time you prompt a model, it generates its response based on what it learned in training. Unlike conventional programming, from writing a calculator in C++ to developing a video game in Python, there’s no definite, human-written sequence of steps to trace.
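The contrast between traceable code and a model’s learned behavior can be sketched in a few lines. This is a toy analogy in Python, not a description of how language models work internally: it compares a hand-written rule, whose logic you can read step by step, with a tiny linear scorer whose decision is just a sum of numeric weights. The function names, the spam example, and the weights are all hypothetical; the weights are invented by hand rather than learned, and a real model has billions of them.

```python
# Toy analogy (hypothetical names and numbers): a traceable rule vs. a weight-based decision.

def rule_based_is_spam(message: str) -> bool:
    # Every step of this decision is written out and can be traced by a human.
    return "free money" in message.lower()

# Hypothetical weights, written by hand for illustration. In a trained model they
# would be fitted to data, and there would be billions of them, not four.
WEIGHTS = {"free": 1.3, "money": 0.9, "meeting": -1.1, "tomorrow": -0.4}
BIAS = -1.0

def weighted_spam_score(message: str) -> float:
    # The "reasoning" is only arithmetic: bias plus the weight of each known word.
    return BIAS + sum(WEIGHTS.get(word, 0.0) for word in message.lower().split())

def weighted_is_spam(message: str) -> bool:
    return weighted_spam_score(message) > 0.0

if __name__ == "__main__":
    for text in ["Free money now", "meeting tomorrow", "free meeting"]:
        print(text, rule_based_is_spam(text), round(weighted_spam_score(text), 2))
```

Even in this four-weight toy, asking why “free meeting” is not flagged yields only a sum of numbers, not a reason. Scale that up by many orders of magnitude, with nonlinear layers in between, and you get a sense of why the black box problem is so hard.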

If we can’t see the process of arriving at comparatively minor but bad conclusions, how do we fix bigger, worse conclusions cooked up by future AI models? Fine-tuning, alignment efforts, and reinforcing the build on the development side can only accomplish so much.

What further aggravates the problem is that today, anyone can train their own models. There have been instances of rogue AI bots, or models trained on malicious data, that could teach you how to make bombs. Such models can be found circulating on the dark web as you read this.

Turing Award winner Yoshua Bengio laid out hypotheses and definitions in a blog post explaining how rogue AI may arise. Since he published that post, work on AI and LLMs has already passed some major milestones, and many of the prerequisites he mentioned have been met or are very close to being met.

The whole space is moving at breakneck speed. There’s no time to measure risks, plan for accidents, or legislate properly. The EU tried its best with its recent AI Act, but even that is a feel-good regulatory framework at worst and a glorified set of consumer-protection guidelines at best.

The controversial “Pause Giant AI Experiments” open letter, signed by Elon Musk, the aforementioned Yoshua Bengio, Steve Wozniak, and 33,705 others, including long-time researchers and scientists in AI, machine learning, and neural networks, wasn’t a publicity stunt. These are real fears held by people with the right credentials.

Are we going too fast? The problem is that there’s no way to tell.

On the one hand, Musk claims that Microsoft’s partnership with OpenAI should come to an end because OpenAI has reached the artificial general intelligence (AGI) stage, a much-feared breakthrough in which AI can generalize across problems and improve itself. On the other hand, leading AI scientists still claim that the benefits of AI far outweigh any potential risks.

Musk is also seeking a ruling that GPT-4 and a new and more advanced technology called Q* would be considered AGI and therefore outside of Microsoft’s license to OpenAI.

(Source: Reuters, “Elon Musk sues OpenAI for abandoning original mission for profit”)

These are new, uncharted waters. And frankly, nobody has any definitive idea.

AI Phobia Peddled by Movies & TV Shows

Cinema shapes society and is shaped by it. It’s a self-sustaining cycle. When certain original ideas feel right in the gut, they spread further. And what started as an interesting, high-tech plot in The Terminator becomes a terrifying scenario looming large for many.

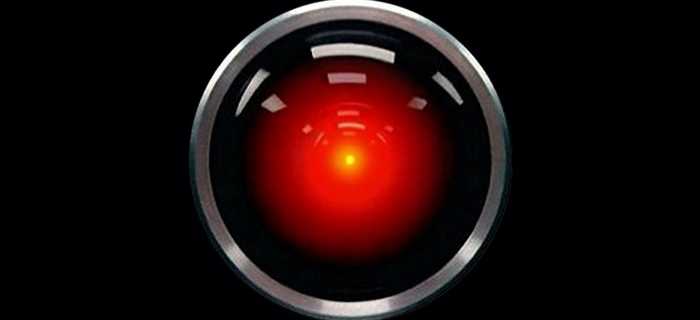

The archetypal AI villain is malevolent, carries superior intelligence, and has a strong desire to control or eliminate humanity. Ultron (Avengers: Age of Ultron) and HAL 9000 (2001: A Space Odyssey) are excellent examples besides Skynet. Showing this repeatedly with varying degrees of realism reinforces the idea of AI as an existential threat. Yes, the “AI-led dystopia” subgenre isn’t exactly overflowing with good examples, but we’ve all seen dozens of flicks along this tangent, maybe not all memorable.

Technically, there’s no real-world basis for any of these situations. It could be argued (though not proven) that these scenarios are as likely, or as unlikely, as alien invasions, asteroid impacts, and all-out nuclear war.

But if it were so shallow and unrealistic, we wouldn’t believe it. There has to be some truth to it. In fact, many of the examples I offered in the earlier sections point toward a world uncomfortably close to these disastrous, world-ending climaxes.

Here, we have to talk about the uncanny valley: the unsettling feeling we get from something whose appearance or behavior is almost, but not quite, human. From the hosts in Westworld and the replicants in Blade Runner to Ava from Ex Machina, we’re all familiar with that feeling.

This plays a pivotal role here, because the uncanny valley accentuates the problem. We see companies making humanoids with facial expressions, and it feeds our fears. Ultimately, by blurring the line between human and machine, all this plays on our anxieties about being replaced or deceived by our own creations.

I’d like to take a moment to introduce an opposing worldview here briefly.

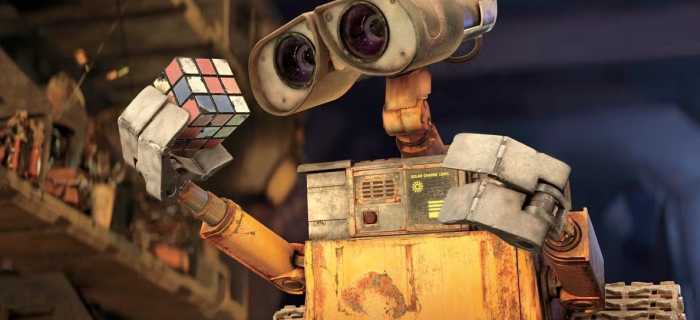

A lot of fiction has portrayed AI and machine intelligence as complementary to humanity. This isn’t limited to movies and TV shows such as Big Hero 6, WALL-E, Chappie, Her, Star Trek, or even the earlier seasons of Westworld when considered in isolation; it’s a common theme in anime (Ghost in the Shell), video games (Portal), and literature (Blindsight) as well. You might like the fluffy robot in Big Hero 6 or hate the Captain in Blindsight, but they are not here to kill us; they are here to work with us at all costs.

Why don’t we prioritize these views over the dystopian ones? Why the human mind finds negativity more magnetic is beyond the scope of this article. For now, I’d only like to remind you that these positive scenarios might be no less likely than Skynet or Ultron.

But then again, the media also likes to paint AI as an unstable force. Even when it has the potential to be good, as in a positive depiction of a future world ruled by AI, things can go south very fast. Short Circuit is a good example of this. It raises a very valid question in the minds of the audience: can we ever truly trust AI? When the very fate of humanity is at stake, we tend to magnify this instability.

Filmmakers also don’t shy away from tapping into the anxieties of the modern age and personalizing them. Cold War fears of nuclear annihilation were easily capitalized on through autonomous war machines. Modern concerns about job loss are narrated all too well in Her and Elysium.

But through all this, we must hold on to common sense. There is a disconnect between AI’s real technological capabilities and the doomsday scenarios often depicted in the media. Consumer tools such as ChatGPT can be unsettling and can reinforce negative stereotypes when their answers seem all too real. But at the same time, they also surprise us. AI tools are highly capable and could be seen as a modern marvel of technology, nothing more than that.

Where Do We Go From Here?

We need nuanced portrayals and critical media literacy. We need open dialogue about the real-world benefits of AI and the challenges we face. Cinema is a powerful tool, and it can shape our thoughts. But it’s a big mistake to rush to judgment when the facts are obscured.

Movies like Bicentennial Man and Big Hero 6 should also be revisited to balance the scales, so to speak.

But that takes us dangerously close to another evil: movies painting an overly positive picture of AI in our minds out of their own greed. There is no conclusive proof today that this is happening, but Disney has certainly tried its hand at this approach. The company is looking to cut costs by using AI, and it’s already actively using AI. So it isn’t in Disney’s interest for AI to be seen in a negative light, and that’s why it might be trying to subtly influence your opinion of AI.

Through 20th Century Studios, the company backed The Creator, a touching story in which AI is good and, even in a war, chooses not to kill humans, while humans are merciless.

Just as AI is a new technology still finding its footing in our world order, movies about AI are formative in their own way. One has to be careful about how much influence they have over us. Slip up, and the aliens monitoring us might still call us buffoons for being scared over nothing, if AI turns out to be the next natural step in a civilization’s progress.

What do you think? Leave a comment.

AI phobia is a deflection from solving actual issues today.

A non-AI system by Cambridge Analytica was instrumental in the 2016 election, microtargeting the specific concerns and vulnerabilities of individual voters to play on their fears. Now imagine what lies and misinformation an AI system can produce, and how difficult it will be for even intelligent people to tell truth from falsehood.

The end result is we’re still well and truly fucked!

Nice try Skynet.

For me, it is less a fear of the tool itself than of how it will be used.

Exactly. It is already a useful tool that helps some workers do their jobs. It can be used to eliminate some monotonous tasks and free up time for more complex work.

But where will you draw the line between monotonous tasks and complex works? Besides, AI music generation and now video and 3D generation are already tackling more advanced work. Specialized AI models trained on financial data, medical data, human psychology, worldbuilding fiction, etc., for example, can all do these tasks better.

If the middle class feels the brunt, they won’t hire other complex task doers the same way, meaning the job loss is not isolated to a particular bracket.

Yes, the initial assumption is correct. They can be used to do some repetitive, less creative tasks and save us time. But the rate at which these models are growing is nothing to disregard casually. For example, let’s say companies are laying off programmers, copywriters, and salespeople. One programmer might make good use of AI to generate or debug code, but when companies lay off employees, it affects the overall employment rate. Sales work can be called monotonous, but how do we really justify laying off a sales team (as Klarna did) while a programmer can still get a job and use AI to work faster? Not to mention that companies are laying off programmers due to AI, too.

The solution could be as simple as economics. Boycott any and all industrial users of machine logic you don’t want to see developed. Pull the plug!

Remember when Y2K was going to send jets crashing into the ground? Computers are the most over-hyped tool ever invented.

The borg have entered this space.

Folks fear it because it’s reasonable to fear a potentially world-shattering technology.

I don’t understand the fear of knives in movies and media. It’s just a tool.

Artificial Intelligence would be great if it were not in the hands of greedy people.

True, but then again, who isn’t greedy, at least occasionally? More to the point, who if anyone can we trust to handle AI so the “phobia versions” don’t become reality? (Cue the opening of another can of worms…)

The direction and development of all technology, including AI, should be democratically mandated and serve humankind; the tools of technological progress should be in the hands of the workers.

A young lawyer I know signed up in order to give himself an opportunity to test the capacities of AI, to see what kind of professional competition it really offered.

All the hype had left him not quite alarmed, but more than merely interested, so he shelled out a little money, fed in a hypothetical case, and got back a flood of case law and settled precedent.

There was just one problem: it was all completely, and not terribly plausibly, invented. Bogus. It might have fooled some outraged amateur, seething over a settlement and looking to challenge it in court, but it was too clumsy to fool anyone who knew anything about the law.

He said that was the moment he stopped worrying about AI. It’s not without the potential to cause some harm and confusion, especially in the political sphere, but it’s not the Next Big Thing any more than the Last Big Thing turned out to be the Next Big Thing.

A chatbot like ChatGPT or Gemini is exactly that: a chatbot. It’s built to predict how humans would respond, or what they would write, after being trained on heaps of data. If you ask it about anything that’s not in its training data, it will make up facts, which is called hallucinating. It’s not being right or wrong; it’s doing its job of predicting what the answer to question X SHOULD look like in a human world.
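The “predict what should come next” idea can be illustrated with a toy bigram model in Python. This is a deliberate simplification: real chatbots use large neural networks over subword tokens and long contexts, not counts of word pairs. The function names and the four-sentence corpus are hypothetical. Still, it shows how a model that only learns which word tends to follow which can produce a fluent sentence that appears nowhere in its training data.

```python
from collections import Counter, defaultdict

# Hypothetical four-sentence "training corpus" for illustration only.
CORPUS = [
    "the court ruled in favor of the plaintiff",
    "the court ruled in favor of the defendant",
    "the court cited smith v jones",
    "the judge cited smith v jones",
]

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    # For each word, count which words followed it in the training text.
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, max_words: int = 8) -> str:
    words = [start]
    for _ in range(max_words - 1):
        followers = counts.get(words[-1])
        if not followers:
            break  # Never saw this word followed by anything: stop.
        # Greedy choice: take the most frequent continuation seen in training.
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

if __name__ == "__main__":
    model = train_bigrams(CORPUS)
    print(generate(model, "the"))
```

Run as a script, it prints “the court ruled in favor of the court”: grammatical, legal-sounding, and stated by none of the training sentences. The model is only chaining locally plausible word pairs. Greedy selection is used here to keep the output deterministic; real systems usually sample from a probability distribution instead.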

A specialized tool, on the other hand, is different. Bloomberg trained a custom AI whose aim was not to converse with people. Bloomberg chopped up decades of financial data and fed it to the model. That model can make financial predictions based on your risk profile much better than an individual financial advisor can.

The same goes for specialized AI tools made to identify certain diseases (where AI consistently beats real diagnosticians). So going to ChatGPT and judging it on a specialized task doesn’t give the full picture; in this test, we’re too quick to dismiss AI as incompetent. The real use of ChatGPT is to hold a conversation and remember context, so try treating it as a psychiatrist instead. It’s likely to have more knowledge than your local psychiatrist.

No company has made a legal AI tool afaik. There’s little incentive right now, as such a lawyer won’t be admitted in the court, meaning there’s no profit to be made here by selling this.

It’s not the increasing ability of AI that is the problem; it’s the decreasing ability of the average human to be useful to their places and each other when artificial authority (overt or covert) continues a trend started by religions.

When the internet was new in the ’90s, like AI is now, there were tons of “the internet is scary” movies: Hackers, The Net, etc. It’s just the cycle of things.

That is a false equivalency plain and simple. The internet created a whole new class of workers, well paid workers in positions that did not and could not exist without the internet. So kind of exactly the opposite of what a.i. is doing.

The internet is scary. Yeah, it’s not a black-and-white thing, but you’re deluding yourself if you think there are no negative aspects to the internet and online culture: cyberbullying, scams and extortion, the legitimization of hate groups, people sharing images of abuse, copyright violations, plagiarism, cyberstalking, the deep web.

There’s loads of positives with the internet, and that’s worth remembering, but there are horrific aspects to the internet that 90s culture would’ve never predicted.

It’s a pity that AI has become the subject of hype, conspiracy theorists and media clickbait.

What is called AI can vary between large scale machine learning, and an application that can look as though it’s intelligent when answering queries. AI currently is only a threat to the jobs of those at the end of a phone link, or an online chat, who are employed to answer queries or sell products. It’s not intelligent under any reasonable definition of intelligence, unless what we call intelligence is simply sophisticated pattern recognition.

The problem is that a lot of human beings are stupid, naive and credulous, and if this hype continues we will get crazed mobs breaking into computer centres to destroy the machines which they believe will take over the world.

This is the common misconception that today’s AI is just a glorified autocomplete. We’ve been using things like chess AI for years, haven’t we? See, the problem is not about generalizing context, pretending to reason, or even the social evils (deepfakes, political misinformation, etc.), because legislation can solve those issues. The real issue is the way a model works. For writing text, a generative AI model is trained on top-notch text already written by human beings, from literary greats to Reddit internet warriors. Now it can write in any tone or style with the intended depth. Ergo, professional writers have a hard time. A recent BBC article told the story of a 60-member team downsized to one person, who now oversees text that the AI creates.

Train it on programming, and it can code better than humans. The result? Mass layoffs in IT companies. Train it on art, music, or videos, and it can do that too. There are news reports of uncanny results and corporate layoffs in all these fields. Music labels sued the new AI music generators, for example, which can create Taylor Swift songs that Taylor Swift has never sung, delivering the same pop blasts and catchy tunes. To substantiate this claim, I’d like you to check the tracking document that covers all the top companies in the world (I’ve forgotten the name). It clearly shows that even with huge upswings in profit, layoffs have been even greater over the last two years. The COVID-induced economic slowdown is not to blame anymore.

Now, we see generative AI enter new domains. Weather forecasting, protein synthesis, and even text-to-3D, which basically means even the complex job of a motion graphics artist, medical scientist, 3D modeler, etc. is at risk.

Just bear with me for a second. These are facts: generative AI is entering new domains, even very complex ones. I’d say it’s safe to call something like 3D modeling or writing a fiction novel a very human pursuit. It involves a fair amount of creativity. If AI can beat humans in these, then getting hung up on “whether it can really think or just pretend” is not practical. Yes, it has no mind, isn’t actively weighing ethics, isn’t really feeling. But it’s doing a better job. The material world respects efficiency (a little too much).

If generative AI tools become too advanced, permeate all creative domains, totally overwhelm not-so-creative domains like live chat support, and keep growing at breakneck speed, getting better with each iteration, then saying “AI currently is only a threat to the jobs of those at the end of a phone link, or an online chat, who are employed to answer queries or sell products.” is objectively wrong. And my main gripe is with the next sentence, “It’s not intelligent under any reasonable definition of intelligence,” as it deflects people’s attention from the real concern (generative AI causing layoffs) and reduces it to a philosophical question. It’s not intelligent, I grant you that. But that doesn’t mean we should dismiss AI as hype. That might not be the best way to look at it.

Awareness equals legislation. Companies are churning out new models at a speed we simply cannot legislate for. New AI labs and venture-capital-funded startups are finding new ways to chop up data and enter new domains. It was extremely easy to enter the domains of text and image generation because that data is very easy to chop up. Something like programming is more difficult. But if they are building models trained on music and videos, it’s clearly not that hard to chop and tokenize even such complex data for training purposes.

And once someone finds a way to tokenize some kind of data (weather data, protein synthesis, financial market movements, finding loopholes in legal defense, Disney animation movies, and so on), AI can do a better job than many, many humans together, given enough training time. This is pretty much what’s happening. Whether it’s identifying Alzheimer’s or figuring out what makes a Hayao Miyazaki movie work, tokenized data will let AI do both better. Someone just needs to make a specialized tool and tokenize the data. Data centers used for training are cheap anyway.

I don’t know about human extinction but AI looks capable of making a very big impact on the way we live. At present I’m reminded of the early days of the World Wide Web – there were exaggerated expectations of immediate change which didn’t come off, and the cynics then felt vindicated – the internet was mainly hype. But, for good or bad, it wasn’t.

Keep in mind that AI is being trained by the information scraped off the World Wide Web. We know it’s biased.

The danger with AI is not as an independent being but as a weapon in the hands of politicians and other servants of the ruling capitalist classes.

So far, the big concern about Artificial Intelligence is what it can be used for in the hands of natural stupidity. We need better people!

The killer robots are coming.

Have a look at Boston Dynamics and the DARPA organisation. And that’s just what’s not on the secret list. The automatons are dancing just like people, amazing!

But that’s not all they can do; the dancing is to disguise these uncanny contraptions’ abilities. Give them a gun and see what happens.

So-called AI is just memory, and even then it never remembers its mistakes.

Can we at least do Star Trek instead of Terminator 2? ‘Red Dwarf’ or ‘The Hitchhiker’s Guide to the Galaxy’ would be more entertaining.

Great to see this article live on the site! It was a pleasure to edit, and I’m looking forward to seeing what you write next!

AI is being bandied around with few people really understanding what it means.

Just one question about this whole AI thing: How do I opt out if I don’t want any part of my life controlled by AI?

My instinct is to say you can’t, but there is usually a way to opt out of these things. It may just be more complicated than you want to deal with. It’s like wanting to opt out of the Internet or public school. Can you? Yes, absolutely. Is it physically, mentally, and emotionally costly? Yes, so that kind of decision needs to come with some soul-searching, as odd as that might sound.

IMO, the current talk about “AI” is missing the point.

It is pointless to argue about intentionality or supremacy of a mechanical system, even a mechanical system that we regularly modify. Any intentionality that rightly may be assigned is the intentionality assigned to the developers (a la Chomsky).

The real problem is people making these mistakes of assigning intentionality, feeling, or correctness to systems that they do not understand. It is our propensity to assign these qualities that is the problem. There is no such intrinsic problem in the technology itself.

AI in itself is fine; it’s the people who will own it that are the issue. They won’t be using it to improve people’s lives; they will be using it to fill their pockets, pay less tax, drain the last of the assets and resources from the rest of us and the planet, fund vanity tech projects, move to another planet or moon, and try to engineer immortality for themselves. And where is all the additional energy for the computing power coming from?

Is nobody concerned by the effects that automation will have on society?

Is nobody asking themselves why companies are investing so much in AI? In a time where inequality is already so high.

Actually the collapse is already underway, nothing to do with the AI though.

The collapse has to do with dwindling resources like fresh water and dying habitats and ecology.

AI is a nice distraction to keep anyone from noticing what you noted.

The party is over.

Sci-fi writers are, ironically, the most anti-technology people out there. They imagine the future, then turn it into the villain to create drama for their stories.

Extrapolation. It’s not some random decision by writers. It’s seeing trends and understanding possible future impact. It’s worth paying attention to.

They’re writers, not prophets. The decision is to create a compelling drama. But if you’re using them to look to the future, you’re gonna have a bad time.

Remember when Orson Scott Card wrote one of the best psychological sci-fi novels of all time, and in it he thought that two people would be able to take over the world by having amazing arguments in website comment sections?

AI is intended to replace human intelligence and labour. Its development is funded by people whose wealth is assured – and will massively increase upon implementation. If that is not you, AI is a threat.

I think we can embrace AI whilst still regulating how it is used.

The apocalypse isn’t here right now; however, let it run its course according to the principles of aggressive capitalism, and in ten or twenty years it may well be.

A really interesting discussion with some great research points. Thanks for sharing, but also no thanks for now implicating me in the great evil to come.

It’s not only TV shows and movies about AI: I find the entire sci-fi genre, in both books and film/TV, marked overall by pessimism about the future. Humans are both obsessed with the technology of the future and fearful of their own demise because of it. I wonder what hidden truth lies beneath that psychological paradox.