Issues of Consent, Representation, and Exploitation in Deepfake Pornography

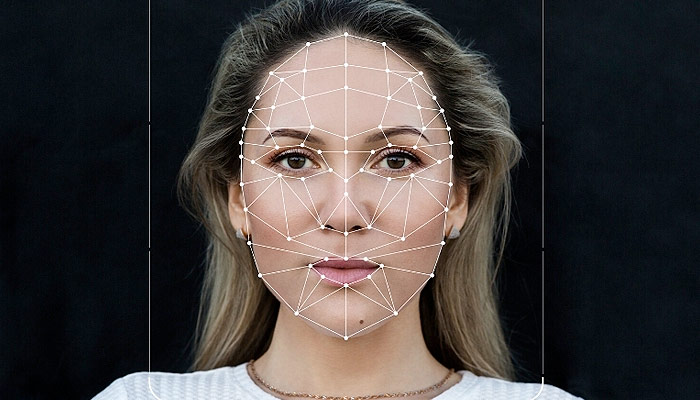

Deepfakes are a kind of synthetic media that use machine learning to create an artificially manipulated image or video bearing someone’s likeness or, in some cases, an entirely artificial person. 1 The term derives from a Reddit user of the same name, 2 though it has also been described as a portmanteau of “deep learning” and “fake.” 3 Tyrone Kirchengast explains the mechanism behind deepfake technology as follows:

> It allows users to superimpose existing images and videos onto source images or videos using a machine learning technique or programme. In order to create a highly believable final manipulated image the technology must be able to determine the data points to match the image to the final manipulated one. 4

In other words, deepfakes appear realistic only when a large database of source images and videos is available. A machine learning algorithm draws on the many data points in this database to produce an image or video that looks believable and moves as a real person would. As a result, the people most often depicted are those whose images are abundant online: celebrities and politicians.

These media have been steadily rising in both infamy and popularity in recent years. On learning what they are, one can imagine many purposes for which deepfakes could be used, some nefarious and others harmless. Today, three uses tend to come up when deepfakes are discussed: parody, politics, and pornography. In humorous deepfakes, the absurdity of the artifice is generally the source of the humor. For instance, the notion of Nicolas Cage’s likeness appearing in a number of films is inherently funny. 5 Humorous deepfakes are, for the most part, seen as harmless. Like other parodic, imitative, or satirical works, they are often legally protected when they qualify as “parody,” 6 since they are usually not explicitly out to deceive or damage anyone (or at least no more than political cartoons damage the reputations of politicians). The same cannot be said of deepfakes’ applications in politics and pornography.

News media often frame the largest problem with deepfakes as the challenge they pose to truth in politics. 8 The problem with political deepfakes is generally understood as their ability to misrepresent politicians through fraudulent videos and statements, which news media in turn portray as a threat to democracy. While this concern is valid, Sophie Maddocks argues that it comes at a cost to how the threat of deepfake pornography is perceived: “fake porn is lumped together and played off as mere ‘speech’ – perhaps distasteful, but not damaging. Conversely, political deep fakes are seen to possess the power to dupe voters, steal elections, and undermine democracies.” 9 Political deepfakes are indeed problematic. But deepfake pornography, as this article will discuss, has consequences just as harrowing, and in the vast majority of cases those consequences fall on marginalized genders and groups. Like Maddocks, Chandell Gosse and Jacquelyn Burkell find that news reporting suggests that “the threat presented by deep-fakes pornography is less serious, less genuine, and less significant than the potential political consequences of fake news and disinformation.” 10 This article follows these projects in seeking a larger platform for the problematics of deepfake pornography.

Defining Deepfake Pornography

Deepfake pornography, in its most technical sense, turns the deepfake process pornographic, typically by superimposing one face onto the body of an adult performer. In 2019, about 96% of all extant deepfakes were pornographic. 11 This makes it all the more questionable that their harms have been deprioritized in favor of those of political deepfakes. It may seem obvious, but it must also be stated that most deepfake videos use women’s faces and bodies. 12 Deepfake pornography therefore, like most pornography, largely targets women. It also perpetuates an existing power imbalance in which men hold the gaze and women are objects to be looked at.

Deepfake pornography follows another tradition, commonly referred to as “revenge pornography”: the act of sharing private sexual materials without consent. 13 Maddocks argues that the term “revenge porn” reduces the harms of sexual violence and image abuse to “a simple ‘scorned ex-boyfriend’ narrative.” 14 This article therefore uses the term “image-based sexual abuse” to reflect the graver reality of this genre of pornography. Deepfake pornography, celebrity nude hacks, sex tape leaks, and other forms of image-based sexual abuse are closely related in three ways. They are disproportionately gendered (acts that men do to women), 15 they spread through online sharing, and they are inherently non-consensual.

Non-Consent and Deepfake Pornography

Non-consent in deepfake pornography is twofold: neither the adult performer nor the person whose face is superimposed (often a celebrity) has consented. For the adult performer, their labor is plainly being shared without recognition, dignity, or monetary compensation. Their body exists as a site onto which another face is superimposed, useful only insofar as their figure closely matches the person whose face has been placed on it. On websites where requests for deepfake pornography are common, makers often tell requesters to find adult performers whose bodies “match” their request. 17 Indeed, these adult performers are stripped of any shred of subjectivity or dignity, existing entirely as objects to be “erased, edited, and recirculated without their consent.” 18 These sex workers, who are “digitally decapitated,” 19 are exploited. In deepfake pornography, adult performers gain nothing from the work they have done. Even setting aside questions of consent and representation, deepfake pornography yields a product without, at the very least, paying every contributor. Are its makers not plainly exploiting labor? Once the adult performer’s lack of consent to their inclusion in the video is considered alongside this exploitation, the headless body is cause for deep concern.

For the person whose face is superimposed, a number of consent-related problems surface. To be clear, however, this is not a “problem of consent.” Rather, from a normative standpoint, these are problems that derive from the initial act of non-consent. The relevance of consent in deepfake pornography may be debated. Even so, anyone sympathetic to those whose faces have been thrust into deepfake pornography intuitively sees something wrong with a person’s image being used in a sexual act to which they have not consented. Those who do not think consent is relevant generally hold that, ultimately (and especially in the digital age), no one has a right to their own image and its reproduction. A comment on Reddit’s “r/changemyview” illustrates this view:

> I think the way these things are compared to ‘involuntary pornography’ is completely absurd. The argument for banning them seems to rest on a premise that I fundamentally reject: that you own your likeness. But you don’t – I can take a picture of you without your consent and use it for whatever purpose I want. So, these things shouldn’t be banned, let alone outlawed – they’re just a natural progression of technology. In 20 years I’ll be able to have virtual reality sex with your wife, or your teenage daughter, or YOU, or whatever, and you won’t be able to do a damn thing about it, and that’s as it should be. 21

Rachel Winter and Anastasia Salter discuss two problems with this comment and the mentality behind it. First, the commenter and those who share the argument believe that consent to an original image extends to any further use of that image. It is clear why this is untrue: if people knew their face would be used in deepfake pornography, few would consent to that use, or even to the original image. Second, Winter and Salter discuss how this justification fits into the practice of image-based sexual abuse. Simply put, in this line of thought, victims of this type of abuse should never have been so “promiscuous” or shown off their image sexually in the first place. This morality gives the justification for image-based sexual abuse a somewhat paradoxical “puritanical spin.” 22 It is paradoxical because the same framework would imply that image-based sexual abuse is equally “immoral.”

Whoever is portrayed in deepfake pornography suffers significant social and emotional damage. Nor is it always a celebrity who is portrayed, as “social media creates optimal conditions for the potential creation of deepfakes that feature anyone.” 23 Because deepfake pornography requires a large dataset of photos, the threat of producing a realistic result solely from social media photos may still be overestimated. 24 Nevertheless, it remains a real threat that can harm (most often) women.

That women’s agency and bodily autonomy are harmed is self-evident. Through deepfake pornography, women risk having these videos taken as real. That changes how people perceive them, and it is a change to which they have not consented. Further, watching their body do something they have not done, and would not have done, takes away their agency (and consequently their dignity). This is not a question of the ethics of non-deepfaked pornography. Rather, victims of deepfake pornography see themselves perform what is, to some, a deeply intimate act. Though the body is not technically theirs, most deepfakes seek to make that line as thin as possible. (And what of the damage this does to one’s perception of one’s own body?)

Many makers of deepfake pornography make clear that their videos are fake. 25 This, however, does not undercut the points made above, for three reasons. (1) Knowing that a video is fake does not prevent its decontextualization: individuals may still circulate it in communities where that knowledge is absent. (2) There is no recovering the sense of agency lost by seeing yourself perform an act to which you did not consent. (3) This loss is compounded by the exploitation of the adult performer. The harm caused by deepfake pornography makers’ initial disregard of consent cannot be overstated.

In 2018, journalist Rana Ayyub was the target of a “deepfake porn plot,” in which “far right trolls” circulated a deepfake pornography video bearing her face. She was subjected to harassment, humiliation, and doxxing, including the leaking of her phone number. The stress was severe enough to send her to the hospital. 26 The consequences of deepfake pornography, again, must not be understated. The notion that political deepfakes deserve a larger platform than deepfake pornography reflects a tendency in the public sphere. That tendency shoves aside bodily and emotional harm done to women in favor of what are considered “real” issues, those of politics. But women’s bodies are political, and the harm done through deepfake pornography requires a significantly larger platform. The question of consent in image-based sexual abuse, especially as it appears in deepfake pornography, must be reckoned with.

Avenues for Victims of Deepfake Pornography

The routes to repairing the damage that deepfake pornography can cause are murky, and often none of them suffices to fully compensate those who have already suffered harm. In the United Kingdom, producers of deepfake pornography can be prosecuted for harassment. 28 Writing in The Verge, Megan Farokhmanesh notes that in the United States, “[a]lthough there are many laws that could apply, there is no single law that covers the creation of fake pornographic videos.” 29 The kind of crime to which deepfake pornography belongs is clearly not “new or revolutionary.” Rather, it belongs to a larger family of intimate sexual abuse of which women have long been victims, simply in other forms. 30

There have been calls to introduce laws specifically targeting deepfake pornography. In the UK, image-based sexual abuse is a criminal offence, 31 and reforms to the law have recently been proposed to better protect victims. 32 In the US, the SHIELD Act, a bill that has since passed the House of Representatives, 33 aims to better protect victims of image-based sexual abuse. For now, however, it seems that only political deepfakes are regulated. States that have enacted statutes concerning deepfake pornography have “tended to use language that is inconsistent, does not provide full individual protection, or does not impose liability on the producer or distributor of the content outside of specific circumstances.” 34 In the final analysis, laws can only do so much to combat the real damage done by deepfake pornography. As Farokhmanesh says, “there are no legal remedies that fully ameliorate the damage that deepfakes can cause.” 35 After all, these laws become relevant only once the damage has been done. Whatever compensation or protection they offer, they apply only after the crime has been committed and the person in question has already become a victim.

Beyond these laws, then, there must be other options for those harmed by deepfake pornography. Tyrone Kirchengast says that “[b]efore a rush to criminalise or regulate, it is important to acknowledge that deepfakes may facilitate free speech and fair social and political comment, and can be used for entertainment.” 36 Suppose deepfakes as a whole do have legitimate uses beyond pornography, and victims of deepfake pornography have legal protection only after harm is done. How, then, can harm be prevented in the first place?

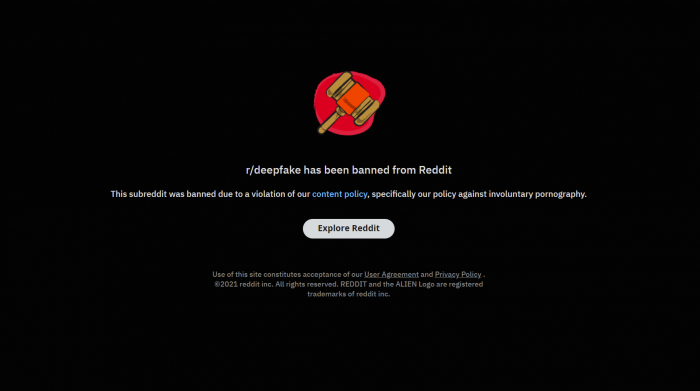

One option is, of course, to ban them from social media and popular websites. Reddit, where deepfakes and deepfake pornography originated, has done this. 37 Many social media sites, especially those hosted in countries with stronger regulation against image-based sexual abuse, have also used community guidelines to ban deepfake pornography. 38 This, however, has pushed deepfake pornography onto other, less popular video-hosting websites. As Milena Popova has pointed out, bans drive communities of deepfake pornography consumers and creators away from popular websites. 39 This lowers the risk of more people seeing the content. It nonetheless remains readily accessible and could be used to harm someone, even when it is labelled as fake. A simple online search shows how easily deepfake pornography can still be found.

Another option is artificial intelligence (AI) that detects fake pornography. Technology has been developed that detects manipulated digital objects with a 96% success rate. 40 More broadly, the notion that AI can police AI is popular among media companies, as the content-detection algorithms used across many social media sites show. However, this is a “computational arms race”: 41 as one side’s technology improves, so does the other’s. Continually improving deepfake technology may spell trouble for future victims of deepfake pornography.

Consequently, what ultimately seems necessary is a combination of government legislation and regulation, initiatives by tech companies, AI detection, and cultural awareness. As noted at the beginning of this article, the threat posed by deepfake pornography has been treated as less legitimate than the threat to the political sphere. Yet the victims of deepfake pornography are political victims as much as they are emotional and digital ones. Image-based sexual abuse is a legitimate political issue. That it typically affects women and is still subordinated to other issues reflects a persistent cultural mentality in which the abuse of women is seen as less pertinent. If we are to recognize all victims and give them equal ground, we must publicize, read about, write about, and speak about the problematics of deepfake pornography.

Works Cited

1. https://mitsloan.mit.edu/ideas-made-to-matter/deepfakes-explained
2. Ibid.
3. https://www.foxnews.com/tech/terrifying-high-tech-porn-creepy-deepfake-videos-are-on-the-rise
4. P. 310, Tyrone Kirchengast (2020) Deepfakes and image manipulation: criminalisation and control, Information & Communications Technology Law, 29:3, 308-323, DOI: 10.1080/13600834.2020.1794615
5. https://www.youtube.com/watch?v=BU9YAHigNx8
6. Liebler, Raizel. 2015. “Copyright and Ownership of Fan Created Works: Fanfiction and Beyond.” The SAGE Handbook of Intellectual Property, edited by Matthew David and Debora Halbert, 391–403. London: SAGE.
7. https://www.youtube.com/watch?v=rvF5IA7HNKc
8. See: https://www.brookings.edu/research/is-seeing-still-believing-the-deepfake-challenge-to-truth-in-politics/, https://thefulcrum.us/deepfake-political-video
9. P. 418, Sophie Maddocks (2020) ‘A Deepfake Porn Plot Intended to Silence Me’: exploring continuities between pornographic and ‘political’ deep fakes, Porn Studies, 7:4, 415-423, DOI: 10.1080/23268743.2020.1757499
10. P. 507, Chandell Gosse & Jacquelyn Burkell (2020) Politics and porn: how news media characterizes problems presented by deepfakes, Critical Studies in Media Communication, 37:5, 497-511, DOI: 10.1080/15295036.2020.1832697
11. P. 353, Kristjan Kikerpill (2020) Choose your stars and studs: the rise of deepfake designer porn, Porn Studies, 7:4, 352-356, DOI: 10.1080/23268743.2020.1765851
12. https://www.jurist.org/features/2021/04/06/explainer-combatting-deepfake-porn-with-the-shield-act/
13. https://psychcentral.com/blog/what-is-revenge-porn#1
14. P. 341, Sophie Maddocks (2018) “From Non-consensual Pornography to Image-based Sexual Abuse: Charting the Course of a Problem with Many Names”, Australian Feminist Studies, 33:97, 345-361, DOI: 10.1080/08164649.2018.1542592
15. P. 399, Olivia B. Newton & Mel Stanfill (2020) “My NSFW video has partial occlusion: deepfakes and the technological production of non-consensual pornography”, Porn Studies, 7:4, 398-414, DOI: 10.1080/23268743.2019.1675091
16. https://www.indiatimes.com/technology/news/hundreds-of-people-are-now-making-fake-celebrity-porn-thanks-to-a-new-ai-assisted-tool-338457.html
17. P. 354, Kristjan Kikerpill (2020) Choose your stars and studs: the rise of deepfake designer porn, Porn Studies, 7:4, 352-356, DOI: 10.1080/23268743.2020.1765851
18. P. 416, Sophie Maddocks (2020) ‘A Deepfake Porn Plot Intended to Silence Me’: exploring continuities between pornographic and ‘political’ deep fakes, Porn Studies, 7:4, 415-423, DOI: 10.1080/23268743.2020.1757499
19. https://thewalrus.ca/the-double-exploitation-of-deepfake-porn/
20. https://thewalrus.ca/the-double-exploitation-of-deepfake-porn/
21. Qtd. in p. 387, Rachel Winter & Anastasia Salter (2020) DeepFakes: uncovering hardcore open source on GitHub, Porn Studies, 7:4, 382-397, DOI: 10.1080/23268743.2019.1642794
22. Ibid.
23. P. 502, Chandell Gosse & Jacquelyn Burkell (2020) Politics and porn: how news media characterizes problems presented by deepfakes, Critical Studies in Media Communication, 37:5, 497-511, DOI: 10.1080/15295036.2020.1832697
24. P. 424, Emily van der Nagel (2020) Verifying images: deepfakes, control, and consent, Porn Studies, 7:4, 424-429, DOI: 10.1080/23268743.2020.1741434
25. P. 376, Milena Popova (2020) Reading out of context: pornographic deepfakes, celebrity and intimacy, Porn Studies, 7:4, 367-381, DOI: 10.1080/23268743.2019.1675090
26. https://www.indiatoday.in/trending-news/story/journalist-rana-ayyub-deepfake-porn-1393423-2018-11-21
27. https://www.washingtonpost.com/people/rana-ayyub/
28. https://www.huffingtonpost.co.uk/entry/what-is-deep-fake-pornography-and-is-it-illegal-in-the-uk_uk_5bf4197ce4b0376c9e68f8c5
29. https://www.theverge.com/2018/1/30/16945494/deepfakes-porn-face-swap-legal
30. P. 347, Sophie Maddocks (2018) “From Non-consensual Pornography to Image-based Sexual Abuse: Charting the Course of a Problem with Many Names”, Australian Feminist Studies, 33:97, 345-361, DOI: 10.1080/08164649.2018.1542592
31. https://www.victimsupport.org.uk/crime-info/types-crime/cyber-crime/image-based-sexual-abuse/
32. https://www.lawcom.gov.uk/reforms-to-laws-around-intimate-image-abuse-proposed-to-better-protect-victims/
33. https://www.congress.gov/bill/116th-congress/house-bill/4617
34. https://www.jurist.org/features/2021/04/06/explainer-combatting-deepfake-porn-with-the-shield-act/
35. https://www.theverge.com/2018/1/30/16945494/deepfakes-porn-face-swap-legal
36. P. 321, Tyrone Kirchengast (2020) Deepfakes and image manipulation: criminalisation and control, Information & Communications Technology Law, 29:3, 308-323, DOI: 10.1080/13600834.2020.1794615
37. https://www.pcquest.com/reddit-twitter-pornhub-stamps-ai-generated-deepfake-porn-videos/
38. P. 323, Tyrone Kirchengast (2020) Deepfakes and image manipulation: criminalisation and control, Information & Communications Technology Law, 29:3, 308-323, DOI: 10.1080/13600834.2020.1794615
39. P. 375, Milena Popova (2020) Reading out of context: pornographic deepfakes, celebrity and intimacy, Porn Studies, 7:4, 367-381, DOI: 10.1080/23268743.2019.1675090
40. https://www.jurist.org/features/2021/04/06/explainer-combatting-deepfake-porn-with-the-shield-act/
41. P. 499, Chandell Gosse & Jacquelyn Burkell (2020) Politics and porn: how news media characterizes problems presented by deepfakes, Critical Studies in Media Communication, 37:5, 497-511, DOI: 10.1080/15295036.2020.1832697

Comments

Great article which raises some fascinating points.

Fake news and now Deep Fakes have taught the public to be very sceptical of what they see and hear in the news media. That’s a good thing.

Deepfakes are going to be handled in the same way as Photoshopped still images: They won’t be stopped at all.

People must stop trusting video footage by default like we have stopped trusting still images by default. If a controversial still image emerges, we demand some kind of backstory or evidence that it’s legit before trusting it whatsoever. An image cannot get any traction without provenance.

Precisely. Photos are no more inherently evidential than drawings or paintings.

Without human witnesses to vouch for the circumstances of production and transmission, images tell us nothing.

Back in the 1980s, during the Iran-Iraq war, I walked into the newsagent. In front of his counter were piles of newspapers.

On the front of one was a picture of an infantryman in silhouette advancing through the fog of war, captioned ‘An Iranian soldier advances’. On the front of another newspaper was the identical picture, mirror-flipped and captioned ‘Iraqi in battle.’

Many years later I heard an agency photographer explain that on several occasions, unable to reach the battlefront, he had employed local militiamen to pose for photos in his hotel garden.

You have spoken the truth.

In essence, nothing has really changed. The whole deepfake thing is just a healthy wake-up call that we can’t necessarily trust something just because we’ve seen a video. It has been perfectly possible to create convincing fakes for a very long time now, so long as you had the resources to do so. Modern computing has just put that within the reach of ordinary people rather than only nation-states and corporations.

One of the most impressive things I saw in a movie as a child was in The Magnificent Seven. Yul Brynner told one of the other seven to clap his hands as fast as he could, and before he got his hands together, Brynner’s gun was in between them. When I became an adult the VCR came out, and guess who went through it frame by frame to see how they did that?

It’s another avenue of manipulation.

Most politicians already are deep fakes yet people naively continue to believe they are as they like to appear. The best thing would be to develop some other way of doing politics that does not rely on dishonest, corrupt and totally out-of-touch politicians.

Deepfakes are no big deal. Journalists have been feeding us lies for decades; having those same misrepresentations in the form of videos instead of words on a page makes no difference.

Vote AI.

Soon we will have no idea who or what to believe.

I wonder if this technology has arrived in time for my neighbour to fabricate evidence against me like he’s been trying to do for five years. Like the time he called the police and said I had a CCTV camera watching him and his girlfriend in bed.

Can we swap Nic Cage and John Travolta’s face back again?

Better. We can make Nic Cage the star of every film, ever.

I feel like the juxtaposition of this with face-recognition technology is worrying. How difficult would it be for a state (say, China) to capture a shot of a person (say, a Uighur not yet in a camp) and insert him into a video where he appears to be committing a crime?

Exactly this. Maybe not today, but given the exponential growth in technological knowledge, it cannot be far away.

And given that, what next?

It’s all very worrying, very Running Man and could be catastrophic.

The process of editing images to superimpose one face onto another still leaves tiny artifacts in the footage used. Adobe have already done some impressive work in creating an AI-tool that can counter these deepfakes by detecting where an image has been digitally manipulated.

Fun technology being misused. Which is what always happens.

The digitization of everything including matters of health and finance has always harboured the possibility of hacking, faking, and rendering dysfunctional.

When voices were first recorded some argued that without seeing the expressions on the faces the listeners could be misled. People adapt and allow for new possibilities.

90% of all communication is non-verbal. You are aware of the phenomenon of people taking audio recordings/the written word out of context, aren’t you? It’s pretty universal. Have you never written anything that was misconstrued? Or have I misconstrued what you were trying to say?

For example, you have no idea how my reply to you ought to be taken: ironically, sincerely, sarcastically, pompously? The truth of that is engraved in my facial expression, my body language, my tone of voice, and the unspoken context (the background to our ‘relationship’). The words by themselves are just the skeleton of my meaning.

Perhaps in future we might digitally sign video and photo footage with a cryptographic key that proves it has not been altered, i.e., any attempt to modify the image from its original form breaks the signature.

Finally, we can put someone who can actually sing into Javert’s role in the 2012 film of Les Mis.

There is no truth any more.

There is always truth. Always facts, probabilities, contexts and logic.

Nothing is as relative as the relativist apologists want to spin it.

There are also always more lies than truth.

What consumers seem to lack is the faculty of critical thought. They seem unable to listen to multiple accounts, consolidate them, assess them on their verifiable factuality and sources, reflect, and come up with their own assessment of ‘probable truthfulness’.

Instead they just look for accounts that validate their preconceptions, and those accounts are happily supplied by propaganda. If Joe Public wants to believe in ghosts, for example, he just reads ghost websites that tell him what he wants to believe.

This is a very ‘western democracy’ problem, and – unless we come up with a solution – our gullible stupidicide will end us.

I like that outlook and stand corrected. Critical thinking is very important. I reject contradiction and believe in objective reality. However, I’m not really sure the problem is confined to western democracy; I think it is more a matter of human nature. I also agree that our propensity to ignore facts will indeed end us.

Quick note on one of the cited images, that is not Ridley Scott’s face, it is Daisy Ridley’s face that is superimposed on the performer.

The comment from “r/changemyview” raises a concern in addition to non-consent. Consuming underage material is illegal, yet that Reddit user claims it is “a natural progression of technology” to eventually be able to have “virtual reality sex with […] [teenagers]”. I cannot help but wonder whether legislation will take any real action until the issue of minors and pornographic deepfakes is brought to the forefront of the public eye. I can only hope it’s not legislation that does nothing about the deepfake issue as a whole and extends only to deepfakes involving minors.

Great read.

Thanks for pointing out the issue, it’s fixed!

Great point on extending protections, and taking into consideration, minors.

Ridley Scott?

Gosh he sure looks a lot like Daisy Ridley to me deepfake or not.

Haha. Thanks for pointing that out; it’s fixed!

A fascinating article and a good read. Perhaps the one important lesson we can all take from this article is that it’s high time some elements of humanity grew up and stopped behaving like schoolyard bullies. I have a dream…

There is an alternate universe of digital innovation existing alongside our old-fashioned world.

Every now and then, something like deepfakes pokes a hole in the thin curtain separating the two worlds.

And for a brief moment we catch a glimpse of our real masters.

This technology can cause massive disruptions in our societies especially once scammers get a hold of it.

A good article. It will be interesting to see where court cases lead in developing prevention. It would seem that if the platforms were sued, as they should be, and forced to pay significant damages, some changes might take place. Yet victory, defined as no-one-should-ever-have-this-happen-to-them-again, might be too much to expect.

A combination of technology, individual malice, criminal disinformation and state cyberwarfare fills the internet with fake crap (video, audio, text etc).

Just about anything that was invented to do good can also be used for evil.

Technology is pushing us towards the reality that everything is an illusion – Maya.

The solution to fake videos should probably be a combination of technological and societal.

Thank you for another informative blog.

This is SUCH an interesting topic. I loved your take on it.

Extremely well done.

The line “women’s bodies are political” is an extremely strong one. My basic intuition is to protect free speech unless it directly leads to violence. I do not think that offence should be grounds for censorship. However, this article has shown why the effects of deepfake pornography go beyond mere offence.